Lustre Isolation vs Multi-Tenancy: What’s the Difference?

The idea of multi-tenancy is to provide isolated namespaces from a single file system. In this post, we consider multi-tenancy to be the general concept, and Lustre Isolation to be its implementation in Lustre.

Lustre Isolation allows different populations of users (tenants) to use the same file system. The tenants share that file system, but they are isolated from each other: they cannot access or even see each other's files, and they are not aware that they are sharing common file system resources.

Implementing Lustre Isolation: Step-by-Step Guide

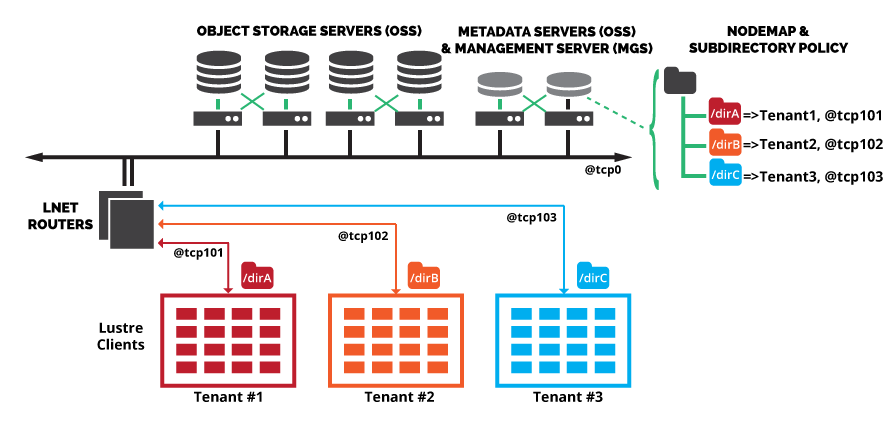

The Lustre Isolation feature will be available starting with EXAScaler 4. To implement Lustre Isolation, we combine two Lustre features:

- subdirectory mount;

- nodemap.

Subdirectory mount, as the name suggests, is the ability to mount only a subdirectory of a Lustre file system rather than its root directory. This subdirectory is called a fileset, and mounting a fileset exposes only a fraction of the Lustre namespace instead of the whole of it. To achieve multi-tenancy, the subdirectory mount, which presents each tenant with only its own fileset, has to be imposed on the clients. This is where the nodemap feature comes in: we group all the clients used by a tenant under a common nodemap entry, and we assign to this nodemap entry the fileset to which the tenant is restricted.
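To make the mechanism more concrete, here is a minimal Python sketch of how a nodemap entry ties a range of client NIDs to a fileset. It is a toy model, not Lustre code: the `Nodemap` class, the tenant names, the IPv4 ranges and the `tcp` network name are hypothetical, and real nodemap ranges are written in Lustre's own NID-range syntax and enforced by the servers.

```python
import ipaddress
import posixpath
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Nodemap:
    """Toy stand-in for a nodemap entry (hypothetical, not a Lustre API)."""
    name: str
    first: ipaddress.IPv4Address  # first client address of the NID range
    last: ipaddress.IPv4Address   # last client address of the NID range
    net: str                      # LNet network name, e.g. "tcp"
    fileset: str                  # subdirectory the tenant is restricted to

    def matches(self, nid: str) -> bool:
        # A NID has the form "<address>@<network>", e.g. "10.0.1.5@tcp".
        addr, _, net = nid.partition("@")
        ip = ipaddress.IPv4Address(addr)
        return net == self.net and self.first <= ip <= self.last


def imposed_fileset(nodemaps: list[Nodemap], nid: str) -> Optional[str]:
    """Fileset imposed on the client with this NID, or None if no entry matches.

    (Real Lustre places unmatched clients in its "default" nodemap instead.)
    """
    for nm in nodemaps:
        if nm.matches(nid):
            return nm.fileset
    return None


def server_path(fileset: str, client_path: str) -> str:
    """Translate a path seen under the client mount point into a file system path.

    The client's "/" is the fileset directory. Normalizing the path as an
    absolute path first means ".." cannot climb above that directory.
    """
    inner = posixpath.normpath("/" + client_path).lstrip("/")
    return posixpath.join(fileset, inner)


if __name__ == "__main__":
    ip = ipaddress.IPv4Address
    maps = [
        Nodemap("tenantA", ip("10.0.1.1"), ip("10.0.1.100"), "tcp", "/tenantA"),
        Nodemap("tenantB", ip("10.0.2.1"), ip("10.0.2.100"), "tcp", "/tenantB"),
    ]
    fileset = imposed_fileset(maps, "10.0.1.5@tcp")
    print(fileset)                                     # /tenantA
    print(server_path(fileset, "../../tenantB/data"))  # /tenantA/tenantB/data
```

The second call shows the point of imposing the fileset: a tenant A client that tries to walk up to tenant B's directory stays inside its own subtree.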

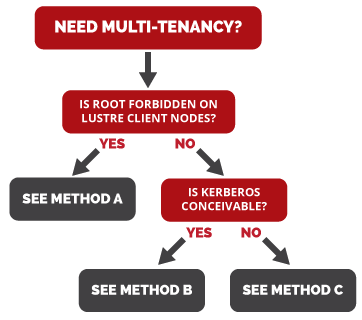

In the end, enforcing multi-tenancy on Lustre comes down to the ability to properly identify the client nodes used by a tenant, and to trust those identities. Depending on the customer's infrastructure and workflow, there are various ways to achieve this:

Method A

If users cannot become root on the Lustre clients, we can safely assume that they are unable to change the Network Identifier (NID) of those clients. Multi-tenancy is then guaranteed by the subdirectory mount and nodemap features, provided we know at all times which client nodes are used by which tenant. This assignment can change over time, but the nodemap entries must then be updated accordingly, and the affected Lustre clients remounted so that they take the new definitions into account.
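Maintaining a tenant's nodemap entry boils down to a handful of `lctl` commands run on the MGS. The Python sketch below only builds and prints the commands for a dry run; it does not execute anything. The tenant names, NID ranges and filesets are made-up values, and the exact syntax should be checked against the Lustre Operations Manual for the Lustre version shipped with your EXAScaler release.

```python
import shlex


def tenant_commands(name: str, nid_ranges: list[str], fileset: str) -> list[list[str]]:
    """Build (but do not run) the lctl commands that define one tenant's nodemap."""
    cmds = [["lctl", "nodemap_add", name]]
    for nid_range in nid_ranges:
        cmds.append(["lctl", "nodemap_add_range", "--name", name, "--range", nid_range])
    # Restrict every client matching this nodemap to the tenant's subdirectory.
    cmds.append(["lctl", "nodemap_set_fileset", "--name", name, "--fileset", fileset])
    return cmds


def move_range(name_from: str, name_to: str, nid_range: str) -> list[list[str]]:
    """Commands reassigning an existing NID range from one tenant to another.

    The range is deleted before it is added, so it is never claimed by two
    nodemaps at once. Clients in that range must then be remounted.
    """
    return [
        ["lctl", "nodemap_del_range", "--name", name_from, "--range", nid_range],
        ["lctl", "nodemap_add_range", "--name", name_to, "--range", nid_range],
    ]


if __name__ == "__main__":
    # Nodemap enforcement is switched on once for the whole file system.
    plan = [["lctl", "nodemap_activate", "1"]]
    plan += tenant_commands("tenantA", ["10.0.1.[1-50]@tcp", "10.0.1.[51-100]@tcp"], "/tenantA")
    plan += tenant_commands("tenantB", ["10.0.2.[1-100]@tcp"], "/tenantB")
    # Later, some nodes move from tenant A to tenant B.
    plan += move_range("tenantA", "tenantB", "10.0.1.[51-100]@tcp")
    for argv in plan:
        print(shlex.join(argv))  # shell-quoted, ready to review before running
```

Keeping the commands as argument lists, and only joining them for display, avoids quoting mistakes with the brackets used in NID ranges.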

The Challenge: When Users Have Root Access on Lustre Clients

If users can be root on the Lustre clients, it generally means that the customer's workflow relies on mechanisms such as virtual machines or Linux containers, with the Lustre clients running inside the VMs or containers. The first issue to tackle is making sure that the root user is mapped to a regular user, which the nodemap can do. In this kind of workflow, the advantage is that dedicated NIDs can be dynamically assigned to the set of clients used by a given tenant. The drawback is that a malicious user could change the NID of a Lustre client, making client identification untrustworthy.
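The root-to-regular-user mapping can be pictured with the simplified Python model below. The field names mirror the nodemap properties `admin`, `trusted` and `squash_uid`, but the mapping rules are a condensed reading of the nodemap behaviour, not Lustre's code, and group IDs, project IDs and other properties are ignored. The tenant name and UID values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class TenantNodemap:
    """Simplified model of a nodemap entry's identity-mapping properties."""
    admin: bool = False    # False: root on the client is squashed
    trusted: bool = False  # False: client IDs go through the idmap
    squash_uid: int = 99   # example UID used for squashed identities
    idmap: dict[int, int] = field(default_factory=dict)  # client UID -> file system UID


def map_uid(nm: TenantNodemap, client_uid: int) -> int:
    """Return the UID the servers act on for a request made by client_uid."""
    if client_uid == 0:
        # Root inside the VM or container keeps root rights only if admin is set.
        return 0 if nm.admin else nm.squash_uid
    if nm.trusted:
        # Trusted clients: IDs are used as presented.
        return client_uid
    # Untrusted clients: mapped IDs are translated, all others are squashed.
    return nm.idmap.get(client_uid, nm.squash_uid)


if __name__ == "__main__":
    # Roughly what these MGS commands would configure for a hypothetical tenant:
    #   lctl nodemap_modify --name tenantA --property admin --value 0
    #   lctl nodemap_modify --name tenantA --property trusted --value 0
    #   lctl nodemap_modify --name tenantA --property squash_uid --value 99
    tenant_a = TenantNodemap(admin=False, trusted=False, squash_uid=99,
                             idmap={1000: 51000})
    for uid in (0, 1000, 1001):
        print(uid, "->", map_uid(tenant_a, uid))
```

With `admin` and `trusted` both off, a tenant who is root in their own container ends up acting as the squashed user on the file system.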

Method B

If Kerberos can be deployed in the customer's infrastructure, it can be used to authenticate the Lustre clients. Even if a client NID is maliciously modified, it will no longer match the Kerberos credentials installed in the VM or container, so communication from this client to the Lustre servers is refused.
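The reasoning behind Method B can be illustrated with a conceptual Python check: a request is accepted only if the credentials it carries belong to the NID it claims to come from. This is not how Lustre's Kerberos (GSS) support is implemented; the principal names, the `PRINCIPAL_TO_NID` table and the `accept` function are all hypothetical, and ticket verification itself is assumed to have happened already.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Request:
    source_nid: str           # NID the request claims to come from
    principal: Optional[str]  # client principal from a verified ticket, if any


# Hypothetical table binding each client principal to the NID it was issued for.
PRINCIPAL_TO_NID = {
    "client/vm-a1.tenant-a.example@EXAMPLE.COM": "10.0.1.5@tcp",
    "client/vm-b1.tenant-b.example@EXAMPLE.COM": "10.0.2.7@tcp",
}


def accept(req: Request, binding: dict[str, str] = PRINCIPAL_TO_NID) -> bool:
    """Accept a request only if its credentials match its source NID."""
    if req.principal is None:
        return False  # no ticket: unauthenticated clients are refused
    return binding.get(req.principal) == req.source_nid


if __name__ == "__main__":
    genuine = Request("10.0.1.5@tcp", "client/vm-a1.tenant-a.example@EXAMPLE.COM")
    # A tenant B VM whose NID was changed to impersonate a tenant A client:
    spoofed = Request("10.0.1.5@tcp", "client/vm-b1.tenant-b.example@EXAMPLE.COM")
    print(accept(genuine), accept(spoofed))  # True False
```

Changing the NID is not enough for the attacker: the credentials installed in the VM still identify the original client, so the pair no longer matches.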

Method C

If Kerberos is not an option, the way to protect against malicious modification of client NIDs is to place Lustre routers, which users cannot access, on the path between clients and servers, and to assign a dedicated LNet network to each tenant. If a client NID is then modified, the router will not let its traffic through. Besides making client identification trustworthy, this approach also eases nodemap configuration, since each tenant's clients can be recognized by the LNet network they are on.
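The router-based protection can be pictured with the toy Python model below. It is a deliberately simplified, hypothetical check, not LNet's routing code: each router port is assumed to face exactly one tenant's LNet network (the port names, the `tcp1`/`tcp2` network names and the subnets are made up), and a message is forwarded only if its source NID belongs to the network and subnet of the port it arrived on. In a real deployment, networks and routes would be configured with `lnetctl`.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RouterPort:
    lnet: str                      # LNet network served by this port
    subnet: ipaddress.IPv4Network  # client addresses expected on that network
    tenant: str                    # the single tenant behind this port


# Hypothetical router layout: one port, one LNet network, one tenant.
PORTS = {
    "eth1": RouterPort("tcp1", ipaddress.IPv4Network("10.0.1.0/24"), "tenantA"),
    "eth2": RouterPort("tcp2", ipaddress.IPv4Network("10.0.2.0/24"), "tenantB"),
}


def forward(ingress: str, source_nid: str,
            ports: dict[str, RouterPort] = PORTS) -> Optional[str]:
    """Return the tenant owning the traffic, or None if the router drops it."""
    port = ports.get(ingress)
    if port is None:
        return None
    addr, _, net = source_nid.partition("@")
    try:
        ip = ipaddress.IPv4Address(addr)
    except ValueError:
        return None  # not a valid IPv4 address
    if net != port.lnet or ip not in port.subnet:
        return None  # NID does not belong to the network behind this port
    return port.tenant


if __name__ == "__main__":
    print(forward("eth1", "10.0.1.5@tcp1"))  # tenantA
    # A tenant A client pretending to be on tenant B's network:
    print(forward("eth1", "10.0.2.5@tcp2"))  # None
```

Because each port, and hence each LNet network, corresponds to one tenant, the tenant of an accepted message follows directly from the network it came from, which is the intuition behind the simpler nodemap configuration.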

Assessing the Performance Impact of Lustre Isolation

The Lustre Isolation feature itself does not incur any penalty on Lustre bandwidth or metadata performance, because tenancy arbitration is carried out only at client mount time. Any performance impact may instead come from the method used to make client identification reliable, such as Kerberos authentication or the extra hop through Lustre routers.
